The 2020 Joint Conference on AI Music Creativity was entirely virtual, and live-streamed here.

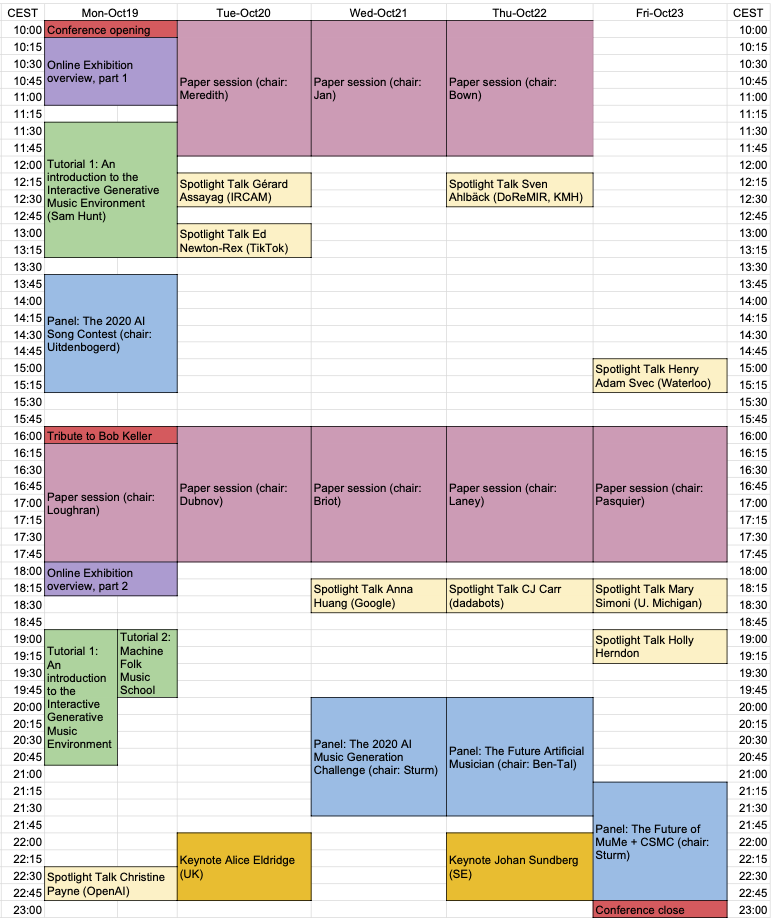

Schedule

Keynotes

(Photo credit: Agata Urbaniak)

Dr. Alice Eldridge, Computer music stimulation of human creativity: techno-musicking as lab for inner and outer worlding video

Various convincing evolutionary theories across science and humanities posit a central role for musicking in the evolution of social, biological and technical patterns of modern humanity. In our contemporary milieu - one of existential, ecological etc. crises - musical experimentation plays a similarly critical role in providing a free and fertile space for making sense of the world, ourselves and our emerging technologies. In this talk I’ll share a range of projects from singing cybernetic homeostats and ecosystemic evolutionary art, through feedback cellos to acoustic biodiversity assessment to illustrate some of the ways that techno-musicking plays a creative role in ways of knowing and making our selves and our worlds.

Dr. Alice Eldridge is interested in how sound organises systems. Her research cross-fertilises ideas and methods from music, cognitive science, technology & ecology to develop biologically-inspired approaches to new musical instruments, and acoustic methods for conservation, within the emerging science of ecoacoustics. She holds a BSc in Psychology, an MSc in Evolutionary and Adaptive Systems and a PhD in Computer Science and AI and is currently Lecturer in Music Technology at the University of Sussex, where she is Co-Director of the Sussex Humanities Lab. Alice has appeared on BBC TV and BBC 4 as a soundscape ecologist; on BBC 3 as a free jazz cellist; on a BBC 6 show as a contemporary chamber composer; and on the BBC 1 John Peel show as a pop bassist. www.ecila.org

Professor Emeritus Dr. Johan Sundberg, Analysis by synthesis: A wonderful tool in difficult areas video

Analysis by synthesis is a classical tool in many areas of research. I will talk about how I enjoyed using it in three research areas. In one, Björn Lindblom and I applied it in a study of the compositional style in nursery tunes composed by the Swedish composer Alice Tegnér at the beginning of the 20th century. A second application was music performance, where musician Lars Frydén, Anders Friberg and I developed a digital performance grammar that controlled a synthesizer; thus, the synthesizer performed music examples in accordance with a set of performance rules. A third area has been the singing voice, the purpose being to explore how various acoustic properties affect the sound of a singer’s voice.

Johan Sundberg (born in 1936; Ph.D. in musicology, Uppsala University, 1966; doctor honoris causa, University of York, UK, 1996) has a personal Chair (Emeritus) in Music Acoustics at the Department of Speech, Music and Hearing, KTH Royal Institute of Technology, Stockholm. He became interested in the acoustical aspects of music early on, starting with a doctoral dissertation on organ pipes. After the dissertation, the singing voice and music performance have been his main research topics. He led the music acoustics research group from 1970 to 2004. In Musikens Ljudlära (The Science of Musical Sounds) Sundberg presents music acoustics in popularized form to the interested layman. In Röstlära (The Science of the Singing Voice) Sundberg explains functional anatomy as well as vocal acoustics. Both texts have been seminal works in the field of voice science.

Spotlights

- Christine Payne, OpenAI Jukebox and MuseNet: Training Neural Nets to Generate New Music video

- Gérard Assayag, IRCAM Co-creativity and Symbolic Interaction video

Improvisation can be seen as a major driving force in human interactions, strategic in every aspect of communication and action. In its highest form, improvisation is a mixture of structured, planned, directed action and of hardly predictable local decisions and deviations that optimize adaptation to the context, express the creative self in a unique way, and stimulate coordination and cooperation between agents. Setting up powerful and realistic human-machine environments that allow co-improvisation requires going beyond state-of-the-art signal processing or reactive systems. We have proposed the expression co-creativity between human and artificial agents in order to emphasize the fact that creativity is a phenomenon of emergence, resulting from cross-feedback and cross-learning processes between complex agents (human, artificial) in interaction. This neutralizes the endless question (and philosophical aporia) of whether artificial entities can be qualified as “creative” by themselves, and shifts the research interest to what is, in our view, a more useful approach: promoting the conditions of co-creative emergence in cyber-human encounters and putting the musician in control of this “machine musicianship”.

- Ed Newton-Rex, TikTok From Lab to Market: Building AI Composition Products video

AI composition has come a long way, and the technology is starting to write convincing music. But to what end? Technology serves little purpose if it isn’t used. In this talk, we look at the range of products that AI composition technology has been applied to, what some of the pitfalls are when moving from research to product development in this nascent field, and what to think about when designing AI composition products.

- Anna Huang, Google Interactive generative models video

How can ML augment how we interact with music? In this talk, I’ll take us on a tour through a series of research projects at Magenta to illustrate how we can design generative models to make music more interactive, accessible and fun for novices, and how these models can also help extend current musical practices for musicians and enable new musical experiences.

- Professor Sven Ahlbäck, KMH + DoReMIR What does AI learn from music?: On the problem of ground truth in music and the power of concepts video

Many AI applications in music relate to music-theoretical models, in the sense of musical concepts and ideas of how music works, that are not always made explicit, discussed or questioned. Even the choice of representations of music and sound, as well as the selection of data for training models, can greatly influence models and creative output. Here we will give some examples of problems arising when modelling basic musical features, from the experience of developing automatic music notation.

- CJ Carr (dadabots) 24/7 AI Death Metal : Eliminating Humans from Music video

Attention AI Music researcher! Are you trapped in the ivory tower doing 100% research and 0% music? Come down and join the fun. Get a glimpse into what it’s like being a Bot Band circa 2020: neural synthesis, soundcloud bots, viral livestreams, music videos, art exhibits, hackathons, tours, and legal hurdles. You will leave feeling inspired to cold-email your favorite musicians about high-tech collaboration.

- Henry Adam Svec, University of Waterloo “Gonna Burn All My Bridges”: LIVINGSTON’s Artificially Intelligent Folk Songs of Canada and an Alternative Way Forward in A.I. Research video

Decades of discovery in the field of artificial intelligence have prioritized intelligence as the lodestar of research and development. What if folkloristic authenticity was substituted as the guiding goal of our collective labours? In this playful performance, involving both storytelling and song, Henry Adam Svec will explore this and other questions through the recounting of a most unlikely scenario—the time when, in Dawson City, Yukon, he co-invented the world’s first artificially intelligent database of Canadian folksong.

- Professor Mary Simoni, Rensselaer Polytechnic Institute AI Mash-Up: musical analysis, generative music, and big data meets music composition video

This talk will highlight AI algorithms as applied to musical analysis, deep learning and generative music, and the application of COVID-19 data sets to musical composition.

- Holly Herndon Holly Herndon: PROTO artist talk video

Over the last few years Holly Herndon has developed music for her ensemble of human and inhuman vocalists. In this artist talk, she will discuss her unique approach to machine learning and explore its limitations as well as the opportunities it might reveal for a 21st century approach to music.

Tutorials

- Samuel Hunt (UWE Bristol, UK): This tutorial introduces participants to the Interactive Generative Music Environment (IGME) and demonstrates the ease with which generative music compositions can be created, without domain-specific knowledge of either generative music or programming constructs. IGME attempts to bridge the gap between generative music theory and accessible music sequencing software, through an easy-to-use score editing (or piano roll) interface. IGME is available free of charge and works on Windows and Mac OS. It is hoped that participants will learn enough about generative music techniques to understand how to use them in their own composition practice. Link to tutorial.

- Bob L. T. Sturm (KTH, Sweden): This tutorial introduces participants to AI-generated folk music through practice. Two AI-generated folk tunes will be taught aurally and discussed. Participants should be comfortable with their musical instrument of choice and be able to learn by ear (but music notation will be provided). The two tunes will be taught gradually by repeating small phrases and combining them to form the parts. Sturm will lead the session with his accordion. Here’s the tunebook.

Panels

- How Do we Rage with the Machine? Exploring the AI Song Space: Alexandra L. Uitdenbogerd (chair), Hendrik Vincent Koops, Anna Huang, Portrait XO, Ashley Burgoyne, and Tom Collins video

- AI Music Generation Challenge 2020: Bob L. T. Sturm (chair), Jennikel Andersson (judge, fiddle), Kevin Glackin (judge, fiddle), Henrik Norbeck (judge, flute & whistle), Paudie O’Connor (judge, accordion) video

- The Future AI Musician: Oded Ben-Tal (chair), Mark d’Inverno, Mary Simoni, Elaine Chew, and Prateek Verma video

- The Future of MuMe + CSMC: Bob L. T. Sturm, Philippe Pasquier, Oliver Bown, Robin Laney, Róisín Loughran, Steven Jan, Valerio Velardo, Artemi-Maria Gioti (chairs) video

Papers

Published with ISBN 978-91-519-5560-5, DOI: 10.30746/978-91-519-5560-5

MON OCT 19 16-18 CEST (Chair: Róisín Loughran)

Tribute to Professor Robert Keller video

Matthew Caren. TRoco: A generative algorithm using jazz music theory pdf video

Jean-Francois Charles, Gil Dori and Joseph Norman. Sonic Print: Timbre Classification with Live Training for Musical Applications pdf video

Jeffrey Ens and Philippe Pasquier. Improved Listening Experiment Design for Generative Systems pdf video

DEMO Joaquin Jimenez. Creating a Machine Learning Assistant for the Real-Time Performance of Dub Music pdf video

TUE OCT 20 10-12 CEST (Chair: David Meredith)

Rui Guo, Ivor Simpson, Thor Magnusson and Dorien Herremans. Symbolic music generation with tension control pdf video

Zeng Ren. Style Composition With An Evolutionary Algorithm pdf video

Raymond Whorley and Robin Laney. Generating Subjects for Pieces in the Style of Bach’s Two-Part Inventions pdf video

Jacopo de Berardinis, Samuel Barrett, Angelo Cangelosi and Eduardo Coutinho. Modelling long- and short-term structure in symbolic music with attention and recurrence pdf video

WIP Aiko Uemura and Tetsuro Kitahara. Morphing-Based Reharmonization using LSTM-VAE pdf video

DEMO Richard Savery, Lisa Zahray and Gil Weinberg. ProsodyCVAE: A Conditional Convolutional Variational Autoencoder for Real-time Emotional Music Prosody Generation pdf video

TUE OCT 20 16-18 CEST (Chair: Shlomo Dubnov)

Carmine-Emanuele Cella, Luke Dzwonczyk, Alejandro Saldarriaga-Fuertes, Hongfu Liu and Helene-Camille Crayencour. A Study on Neural Models for Target-Based Computer-Assisted Musical Orchestration pdf video

Sofy Yuditskaya, Sophia Sun and Derek Kwan. Karaoke of Dreams: A multi-modal neural-network generated music experience pdf video

Guillaume Alain, Maxime Chevalier-Boisvert, Frederic Osterrath and Remi Piche-Taillefer. DeepDrummer : Generating Drum Loops using Deep Learning and a Human in the Loop pdf video

Yijun Zhou, Yuki Koyama, Masataka Goto and Takeo Igarashi. Generative Melody Composition with Human-in-the-Loop Bayesian Optimization pdf video

WIP Joann Ching, Antonio Ramires and Yi-Hsuan Yang. Instrument Role Classification: Auto-tagging for Loop Based Music pdf video

WED OCT 21 10-12 CEST (Chair: Steven Jan)

DEMO Roger Dean. The multi-tuned piano: keyboard music without a tuning system generated manually or by Deep Improviser pdf video

Stefano Kalonaris and Anna Aljanaki. Meet HER: A Language-based Approach to Generative Music Systems Evaluation pdf video

Mio Kusachi, Aiko Uemura and Tetsuro Kitahara. A Piano Ballad Arrangement System pdf video

Mathias Rose Bjare and David Meredith. Sequence Generative Adversarial Networks for Music Generation with Maximum Entropy Reinforcement Learning pdf video

Liam Dallas and Fabio Morreale. Effects of Added Vocals and Human Production to AI-composed Music on Listener’s Appreciation pdf video

WIP Sutirtha Chakraborty, Shyam Kishor, Shubham Nikesh Patil and Joseph Timoney. LeaderSTeM-A LSTM model for dynamic leader identification within musical streams pdf video

WED OCT 21 16-18 CEST (Chair: Jean-Pierre Briot)

Nick Collins, Vit Ruzicka and Mick Grierson. Remixing AIs: mind swaps, hybrainity, and splicing musical models pdf video

Lonce Wyse and Muhammad Huzaifah. Deep learning models for generating audio textures pdf video

Nicolas Jonason, Bob L. T. Sturm and Carl Thomé. The control-synthesis approach for making expressive and controllable neural music synthesizers pdf video

Mathieu Prang and Philippe Esling. Signal-domain representation of symbolic music for learning embedding spaces pdf video

WIP Foteini Simistira Liwicki, Marcus Liwicki, Pedro Malo Perise, Federico Ghelli Visi and Stefan Ostersjo. Analysing Musical Performance in Videos Using Deep Neural Networks pdf video

THU OCT 22 10-12 CEST (Chair: Ollie Bown)

Amir Salimi and Abram Hindle. Make Your Own Audience: Virtual Listeners Can Filter Generated Drum Programs pdf video

Grigore Burloiu. Interactive Learning of Microtiming in an Expressive Drum Machine pdf video

Germán Ruiz-Marcos, Alistair Willis and Robin Laney. Automatically calculating tonal tension pdf video

Hadrien Foroughmand and Geoffroy Peeters. Extending Deep Rhythm for Tempo and Genre Estimation Using Complex Convolutions, Multitask Learning and Multi-input Network pdf video

DEMO James Bradbury. Computer-assisted corpus exploration with UMAP and agglomerative clustering pdf video

THU OCT 22 16-18 CEST (Chair: Robin Laney)

Shuqi Dai, Huan Zhang and Roger Dannenberg. Automatic Detection of Hierarchical Structure and Influence of Structure on Melody, Harmony and Rhythm in Popular Music pdf video

Brendan O’Connor, Simon Dixon and George Fazekas. An Exploratory Study on Perceptual Spaces of the Singing Voice pdf video

Théis Bazin, Gaëtan Hadjeres, Philippe Esling and Mikhail Malt. Spectrogram Inpainting for Interactive Generation of Instrument Sounds pdf video

WIP Manos Plitsis, Kosmas Kritsis, Maximos Kaliakatsos-Papakostas, Aggelos Pikrakis and Vassilis Katsouros. Towards a Classification and Evaluation of Symbolic Music Encodings for RNN Music Generation pdf video

FRI OCT 23 16-18 CEST (Chair: Philippe Pasquier)

Sandeep Dasari and Jason Freeman. Directed Evolution in Live Coding Music Performance pdf video

Fred Bruford, Skot McDonald and Mark Sandler. jaki: User-Controllable Generation of Drum Patterns using LSTM Encoder-Decoder and Deep Reinforcement Learning pdf video

Hayato Sumino, Adrien Bitton, Lisa Kawai, Philippe Esling and Tatsuya Harada. Automatic Music Transcription and Instrument Transposition with Differentiable Rendering pdf video

WIP Darrell Conklin and Geert Maessen. Aspects of pattern discovery for Mozarabic chant realization pdf

WIP Samuel Hunt. An Analysis of Repetition in Video Game Music pdf video

WIP Gabriel Vigliensoni, Louis McCallum, Esteban Maestre and Rebecca Fiebrink. Generation and visualization of rhythmic latent spaces pdf video